In our previous blog post on Value Iteration & Q-learning, we introduced the Markov Decision Process (MDP) as a helpful model for describing the world, and we described how a robot could apply Reinforcement Learning (RL) techniques to learn a policy, $\pi: S \rightarrow A$, that maps the state of the world, $s \in S$, to the action the robot should take, $a \in A$. The goal of RL is to learn the optimal policy, $\pi^*$, that maximizes the expected, future, discounted reward (or return), $V^{\pi}(s)$, the agent receives when starting in state, $s$, and following policy, $\pi$ as given by the equation below.

\begin{align}

\pi^* = \text{arg}\max\limits_{\pi \in \Pi} V^{\pi}(s) = \text{arg}\max\limits_{\pi \in \Pi} \sum\limits_{s’}T(s,\pi(s),s’)[R(s,\pi(a|s),s’) + \gamma V_{\pi}(s’)]

\end{align}

We note that, in the tabular setting (e.g., for value and policy iteration, where one uses a look-up table to store information), the policy is deterministic (i.e, $\pi: S \rightarrow A$). However, when we move to deep learning-based representations of the policy (see the section on Policy Gradient below), the policy typically represents a policy distribution over actions the robot would sample from for acting in the world (i.e., $\pi: S \rightarrow [0,1]^{|A|}$).

To find the optimal policy, we described two approaches in our previous post (i.e., Value Iteration & Q-learning). Value iteration computes the optimal value function, $V^{*}(s)$, from which one can find the optimal policy given by $\pi^*(s) = \text{arg}\max\limits_{a \in A} \sum\limits_{s’}T(s,a,s’)[R(s,\pi(a|s),s’) + \gamma V^{*}(s’)]$. We also introduced Q-learning, in which one can extract the optimal policy by $\pi^*(s) = \text{arg}\max\limits_{a \in A} Q^*(s,a)$, where $Q^*$ is the optimal Q-function. Finally, we extended Q-learning to Deep Q-learning where the Q-function is represented as a neural network rather than storing the Q-values for each state-action pair in a look-up table.

In this blog post, we will follow a similar procedure for two new concepts: (1) Policy Iteration and (2) Policy Gradients (and REINFORCE). Like value iteration, policy iteration is a tabular method for reinforcement learning. Similar to Deep Q-learning, policy gradients are a function approximation-based RL method.

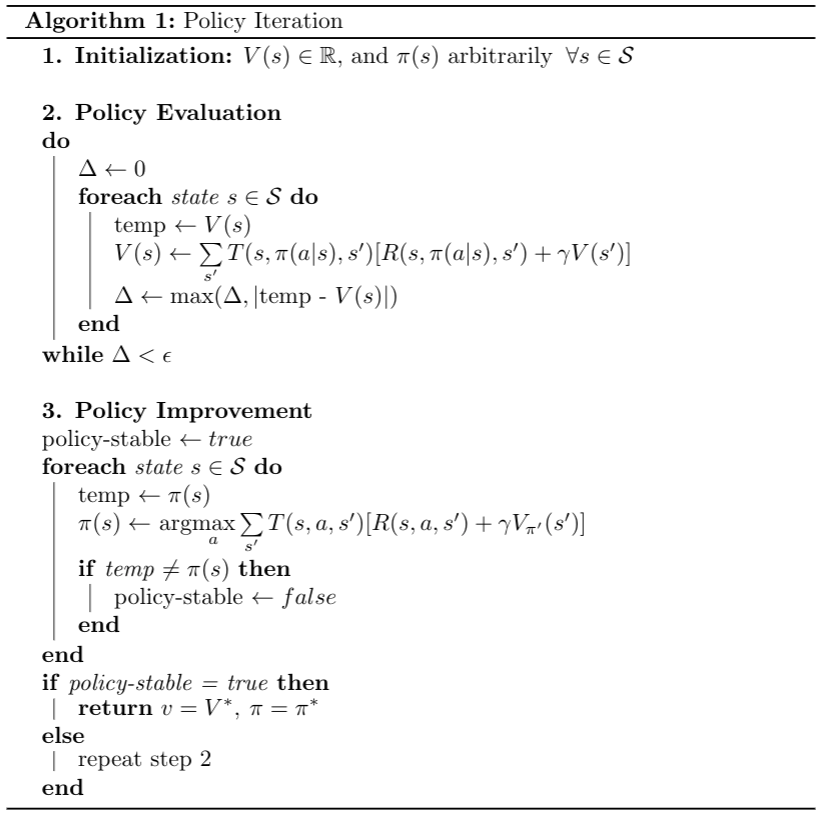

Policy Iteration

The pseudocode for policy iteration is given in Figure 1. At a high level, this algorithm first initializes a value function, $V(s)$, and a policy, $\pi(s)$, which are almost surely incorrect. That’s okay! The next two steps, (2) Policy Evaluation and (3) Policy Improvement, work by iteratively correcting the value function given the policy, correcting the policy given the value function, correcting the value function given the policy, and so forth. Let’s break down the policy evaluation and policy improvement steps.

Policy Evaluation

Policy evaluation involves computing the value function, $V^{\pi}(s)$, which we know how to do from our previous lesson on value iteration.

\begin{align}

V^\pi(s) = \sum\limits_{s’}T(s,\pi(s),s’)[R(s,\pi(s),s’) + \gamma V_{\pi}(s’)]

\end{align}

In policy iteration, the initial value function is chosen arbitrarily, (can be all zeroes, except at the terminal state – which will be the episode reward), and each successive approximation is computed using the following update rule:

\begin{align}

V_{k+1}(s) \: = \: \sum\limits_{s’}T(s,\pi(s),s’)[R(s,\pi(a|s),s’) + \gamma V_{k}(s’)]

\label{Bellman}

\end{align}

We keep updating the value function for the current policy using equation \ref{Bellman} until it converges (i.e., no state value is updated more than $\Delta$ during the previous iteration.

A key benefit of the policy evaluation step is that the DO-WHILE loop can exactly be solved as a linear program (LP)! We do not actually need to use dynamic programming to iteratively estimate the value function! Linear programs are incredibly fast, enabling us to quickly, efficiently find the value of our current policy. We contrast this ability with Value Iteration in which a $\max$ operator is required in the equation $V(s) = \text{arg}\max\limits_{a \in A} \sum\limits_{s’}T(s,a,s’)[R(s,a,s’) + \gamma V_{\pi}(s’)]$, which is nonlinear. Thus, we have identified one nice benefit of policy iteration already!

Policy Improvement

In the policy evaluation step, we determine the value of our current policy. With this value, we can then improve our policy.

Suppose we have determined the value function $V_\pi$ for a suboptimal, deterministic policy, $\pi$. By definition, then, the value taking the optimal action in a given state, $s$, would be at least as good if not better than the value of taking the action dictated by $\pi$. This inequality is given by:

\begin{align}

V^{\pi}(s) = \sum\limits_{s’}T(s,\pi(a|s),s’)[R(s,\pi(s),s’) + \gamma V^{\pi} (s’)] \leq \text{arg}\max\limits_{a \in A} \sum\limits_{s’}T(s,\pi(a|s),s’)[R(s,\pi(s),s’) + \gamma V^{\pi} (s’)], \forall s \in S

\end{align}

Thus, we should be able to find a better policy, $\pi'(s)$, by simply setting choosing the best action as given by the value function, $V^{\pi}(s)$ for our suboptimal policy, $\pi$, as given by:

\begin{align}

\pi'(s) \leftarrow \text{arg}\max\limits_{a \in A} \sum\limits_{s’} T(s’,a,s) [R(s,a,s’) + \gamma V(s’)]

\end{align}

Thus, we can replace $\pi$ with $\pi’$, and we are done improving our policy until we have a new value function estimate (i.e., by repeating the Policy Evaluation Step). The process of repeatedly computing the value function and improving policies starting from an initial policy $\pi$ (until convergence) describes policy iteration.

Just like how Value Iteration & Q-learning have their deep learning counterparts, so does our Policy Iteration algorithm.

Policy Gradient

As explained above, RL seeks to find a policy, $\pi$, that maximizes the expected, discounted, future reward given by following the actions dictated by policy, $\pi(s)$ in each state, $s$. In Policy Iteration, we first compute the value, $V^{\pi}(s)$, of each state and use these value estimates to improve the policy, $\pi$. Let $\theta$ denote the policy parameters of a neural network. We denote this policy as $\pi_{\theta}(s)$. Unlike the policy iteration method, policy gradient methods learn a parameterized policy (commonly parameterized by a neural network) to choose actions without having to rely on a table to store state-action pairs (and, arguably, without having to rely on an explicit value function; however, that depends on your formulation, which we will return to later).

With policy gradients, we seek to find the parameters, $\theta$, that maximizes the expected future reward. Hence, policy gradient methods can be formulated as a maximization problem with the objective function being the expected future reward as depicted in Equation \ref{PG_obj}, where $V^{\pi_\theta}$ is the value function for policy parameterized by $\theta$.

\begin{align}

J(\theta) \: = \mathbb{E}_{s \sim \rho(\cdot)} V^{\pi_\theta}(s) = \mathbb{E}_{s \sim \rho(\cdot), a \sim \pi(s)} \left[\sum\limits_{t=0}^\infty \gamma^t r_{t} | s_0 = s\right]

\label{PG_obj}

\end{align}

To maximize $J(\theta)$, we perform gradient ascent as shown in Equation \ref{gradient ascent}. For more details on solving optimization problems with gradient-based approaches, please see the blog post on Gradient Descent.

\begin{align}

\theta_{k+1} = \theta_k + \alpha \nabla_\theta J(\theta)

\label{gradient ascent}

\end{align}

In the case of policy gradient methods, the action probabilities change smoothly as a function of the learned parameters, $\theta$, whereas in tabular methods (e.g., Policy Iteration), the actions may change drastically even for small changes in action value function estimates. Thus, policy gradient-based methods may be more sample-efficient when this assumption holds by essentially interpolating the right action when in a new state (or interpolating the right Q-value in the case of Q-learning). However, if this smoothness property does not hold, neural network function approximation-based RL methods, e.g., Deep Q-learning or Policy Gradients, will struggle. Nonetheless, this smoothness assumption does commonly hold — at least enough — that deep learning-based methods are the mainstay of modern RL.

The Policy Gradient Theorem

(This derivation is adapted from Reinforcement Learning by Sutton & Barto)

We will now compute the gradient of the objective function $J(\theta)$ with respect to the policy parameter $\theta$. Henceforth, we will assume that the discount factor, $\gamma=1$, and $\pi$ in the derivation below represents a policy parameterized by $\theta$.

\begin{align}

\begin{split}

\nabla_\theta J(\theta) &= \nabla_\theta(V_{\pi_\theta}) \\

&= \nabla_\theta \left[\sum\limits_a \pi_\theta(a|s) Q_{\pi}(s,a)\right] \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \text{(From }\ref{Q_v})\\

&= \sum\limits_a \Bigg[\nabla_\theta \pi_\theta(a|s) Q_{\pi}(s,a) + \pi_\theta(a|s) \nabla_\theta Q_{\pi}(s,a) \Bigg] \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \text{(by product rule)}\\

&= \sum\limits_a \Bigg[\nabla_\theta \pi_\theta(a|s) Q_{\pi}(s,a) + \pi_\theta(a|s) \nabla_\theta \left[\sum\limits_{s’} T(s,a,s’)[R(s,a,s’) + V_{\pi}(s’)] \right]\Bigg]\\

&= \sum\limits_a \Bigg[\nabla_\theta \pi_\theta(a|s) Q_{\pi}(s,a) + \pi_\theta(a|s) \sum\limits_{s’} T(s,a,s’)\nabla_\theta V_{\pi}(s’) \Bigg]\\

\text{Unrolling $V_{\pi}(s’)$, we have… }\\

&= \sum\limits_a \Bigg[\nabla_\theta \pi_\theta(a|s) Q_{\pi}(s,a) + \pi_\theta(a|s) \sum\limits_{s’} T(s,a,s’) \sum\limits_{a’} \Bigg[\nabla_\theta \pi(a’|s’)Q_{\pi}(s’,a’) + \pi(a’|s’)\sum\limits_{s'{}’} T(s’,a’,s'{}’) \nabla_\theta V_{\pi}(s'{}’) \Bigg] \Bigg]\\

\end{split}

\end{align}

We can continue to unroll $V_{\pi}(s'{}’)$ and so on…Unrolling ad infinitum, we can see:

\begin{align}

\nabla_\theta J(\theta) \: = \: \sum\limits_{s \in \mathcal{S}} \sum\limits_{k=0}^{\infty}Pr(s_0 \rightarrow s, k, \pi)\sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)

\label{PG_1}

\end{align}

Here, $Pr(s_0 \rightarrow s, k, \pi)$ is the probability of transitioning from state $s_0$ to $s$ in $k$ steps while following the policy $\pi$. Rewriting $Pr(s_0 \rightarrow s, k, \pi)$ as $\eta(s)$ in Equation \ref{PG_1}, we have:

\begin{align}

\begin{split}

\nabla_\theta J(\theta) \: &= \: \sum\limits_{s} \eta(s)\sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)\\

&= \: \sum\limits_{s’} \eta(s’) \sum\limits_{s} \frac{\eta(s)}{\sum\limits_{s’}\eta(s’)} \sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)\\

&= \: \sum\limits_{s’} \eta(s’) \sum\limits_{s} \mu(s) \sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)\\

&\propto \: \sum\limits_{s} \mu(s) \sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)\\

\end{split}

\label{PG_final}

\end{align}

We note that we set $\mu(s) = \frac{\eta(s)}{\sum\limits_{s’}\eta(s’)}$ for convenience. Now, one might ask, what about the derivation for $\gamma \neq 1$? Well, that is a great question! Candidly, we have not seen a clean derivation when $\gamma \in (0,1)$, and we would welcome any readers out there to email us should such a derivation exist that we could link to!

REINFORCE – Monte Carlo Policy Gradient

From Equation \ref{PG_final}, we find an expression proportional to the gradient. Taking a closer look at the right-hand side of Equation \ref{PG_final}, we note that it is a summation over all states, weighted by how often these states are encountered under policy $\pi$. Thus, we can re-write Equation \ref{PG_final} as an expectation:

\begin{align}

\begin{split}

\nabla_\theta J(\theta) \: &\propto \: \sum\limits_{s} \mu(s) \sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a)\\

&= \: \mathbb{E}_{s \sim \mu(\cdot)}\left[\sum\limits_a \nabla_\theta \pi_\theta(a|s) Q_\pi(s,a) \right] \\

\end{split}

\label{PG_grad}

\end{align}

We modify the gradient expression in Equation \ref{PG_grad} by (1) introducing an additional weighting factor $\pi_\theta(a|s)$ and dividing by the same without changing the equality, and (2) sampling an action from the distribution instead of summing over all actions. The modified update rule for the policy gradient algorithm is shown in Equation \ref{REINFORCE}.

\begin{align}

\begin{split}

\nabla_\theta J(\theta)\: &= \: \mathbb{E}_{s \sim \mu(\cdot)}\left[\sum\limits_a \pi_\theta(a|s) Q_\pi(s,a) \frac{\nabla_\theta \pi(a|s)}{\pi(a|s)} \right] \\

&= \: \mathbb{E}_{s \sim \mu(\cdot), a\sim \pi(\cdot|s)}\left[Q_\pi(s,a) \frac{\nabla_\theta \pi_\theta(a|s)}{\pi_\theta(a|s)} \right] \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \text{($a$ here is sampled from $\pi$)}\\

&= \: \mathbb{E}_{s \sim \mu(\cdot), a\sim \pi(\cdot|s)}\left[Q_\pi(s,a) \nabla_\theta \: \text{log}\: \: \pi_\theta(a|s) \right] \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \left(\nabla_\theta \: \text{log}\: \: \pi_\theta(a|s) = \frac{\nabla_\theta \pi_\theta(a|s)}{\pi_\theta(a|s)} \right)\\

&= \: \mathbb{E}_{s \sim \mu(\cdot), a\sim \pi(\cdot|s)}\left[G_t \nabla_\theta \: \text{log}\: \: \pi_\theta(a|s) \right] \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \; \text{($G_t$ is the return, and $\mathbb{E}_{\pi}[G_t|S_t=s, A_t=a] = Q_\pi(s,a)$)}\\

\end{split}

\label{REINFORCE}

\end{align}

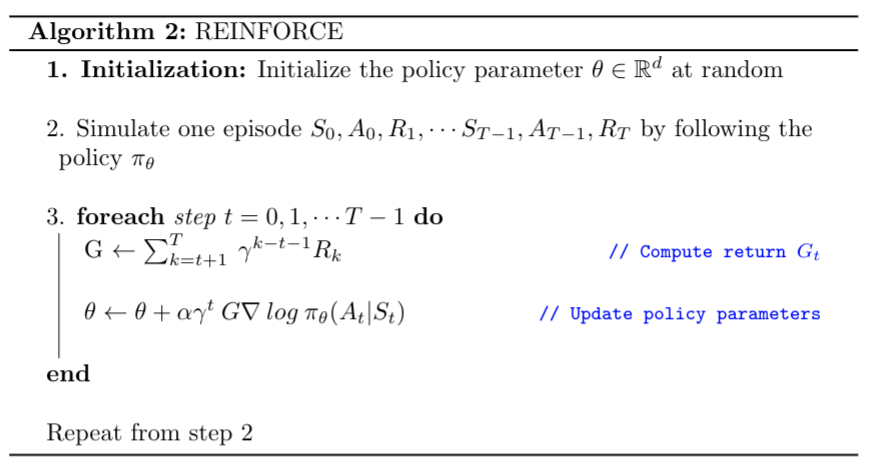

Including the logarithm creates a weighting factor based on the probability of occurrence of different state-action pairs, allowing us to leverage the expected gradient update over actions without numerically estimating the expectation! The final expression within the expectation in Equation \ref{REINFORCE}, is the quantity that can be sampled at each timestep to update the gradient. The REINFORCE update using gradient ascent is described in Equation \ref{grad_update}.

\begin{align}

\theta_{t+1} \: \dot{=} \: \theta_t + \alpha G_t \: \nabla_{\theta} \: \text{log}\: \: \pi_{\theta_t}(a|s)

\label{grad_update}

\end{align}

Fig. 2 shows the complete REINFORCE algorithm.

Returning to our aside from earlier about whether policy gradients rely on an estimate of the value function, our derivation here, as depicted in Fig. 2, does not rely on the value function. However, as our derivation alluded to, one could use the value function for $G_t = V^{\pi}(s)$. One could also use the Q-function, $G_t = Q^{\pi}(s,a)$. When setting $G$ equal to the value or Q-function, we refer to the the update as a actor-critic (AC) method. When we use the advantage function formulation, $G_t = Q^{\pi}(s,a) – V^{\pi}(s)$, the update is known as an advantage function, actor-critic (A2C) method. For AC and A2C, we need neural network function approximators to estimate the Q- and/or value functions. While adding on and learning from these additional neural networks adds computational complexity, AC and A2C often work better in practice than REINFORCE. However, that is a point that we will leave for a future blog!

Takeaways

- A policy is a mapping from states to probabilities of selecting every possible action in that state. A policy $\pi^*$ is said to be optimal if its expected return is greater than or equal to any other policy $\pi$ for all states.

- Policy Iteration is a non-parametric (i.e., tabular, exact, not deep learning-based) approach to compute the optimal policy. Policy iteration alternates between policy evaluation (computing the value function given a policy) and policy improvement (given a value function) to converge to the optimal policy.

- Policy gradients are a powerful, deep learning-based approach to learning an optimal policy with neural network function approximation for the policy.

References

Richard S. Sutton and Andrew G. Barto. 2018. Reinforcement Learning: An Introduction. A Bradford Book, Cambridge, MA, USA.