Tutorial at the International Conference on Autonomous Agents and Multi-Agent Systems 2022

Overview

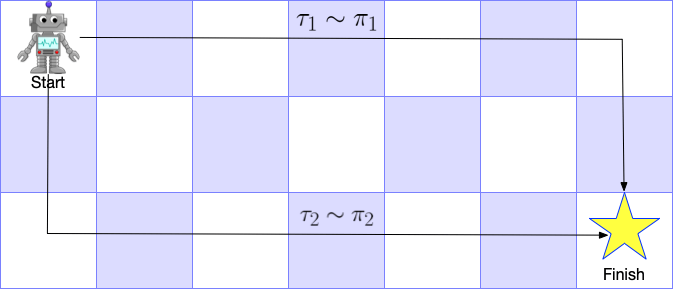

Robot teams are increasingly deployed in environments such as manufacturing facilities and warehouses to reduce cost and improve productivity. To coordinate multi-robot teams efficiently, fast, high-quality scheduling algorithms are essential for satisfying the temporal and spatial constraints imposed by dynamic task specification and by part and robot availability. Traditional solutions include exact methods, which are intractable for large-scale problems, and application-specific heuristics, which require expert domain knowledge. What is critically needed is a new approach that automatically learns lightweight, application-specific coordination policies without the need for hand-engineered features.

This tutorial is an introduction to graph neural networks and a showcase of their power in solving multi-robot coordination problems. We survey various graph neural network frameworks from the recent literature, with a focus on their application to modeling multi-agent systems. We will introduce multi-robot coordination (MRC) problems and review the most relevant methods available for solving them. We will discuss several successful applications of graph neural networks to MRC problems, with hands-on tutorials in the form of Python example code. With this tutorial, we aim to provide experience in employing graph neural networks to model multi-robot systems, from algorithm development to code implementation, thus opening up future opportunities for designing graph-based learning algorithms in broader multi-agent research.

Content

In this tutorial, we will discover the power of graph neural networks for learning effective representations of multi-robot team coordination problems. The tutorial will feature two 90-minute online sessions.

Date and Time: May 8, 11:00 – 14:00 (EDT)

- 3:00 – 6:00, May 9, Auckland Time

- 11:00 – 14:00, May 8, New York Time

- 17:00 – 20:00, May 8, Paris Time

- 20:30 – 23:30, May 8, Kolkata Time

- 23:00 – 2:00 (+1 day) May 8, Beijing Time

Zoom link: https://gatech.zoom.us/j/93966169541 [Video recordings are posted below]

Session #1 by Matthew Gombolay (11:00 – 12:30)

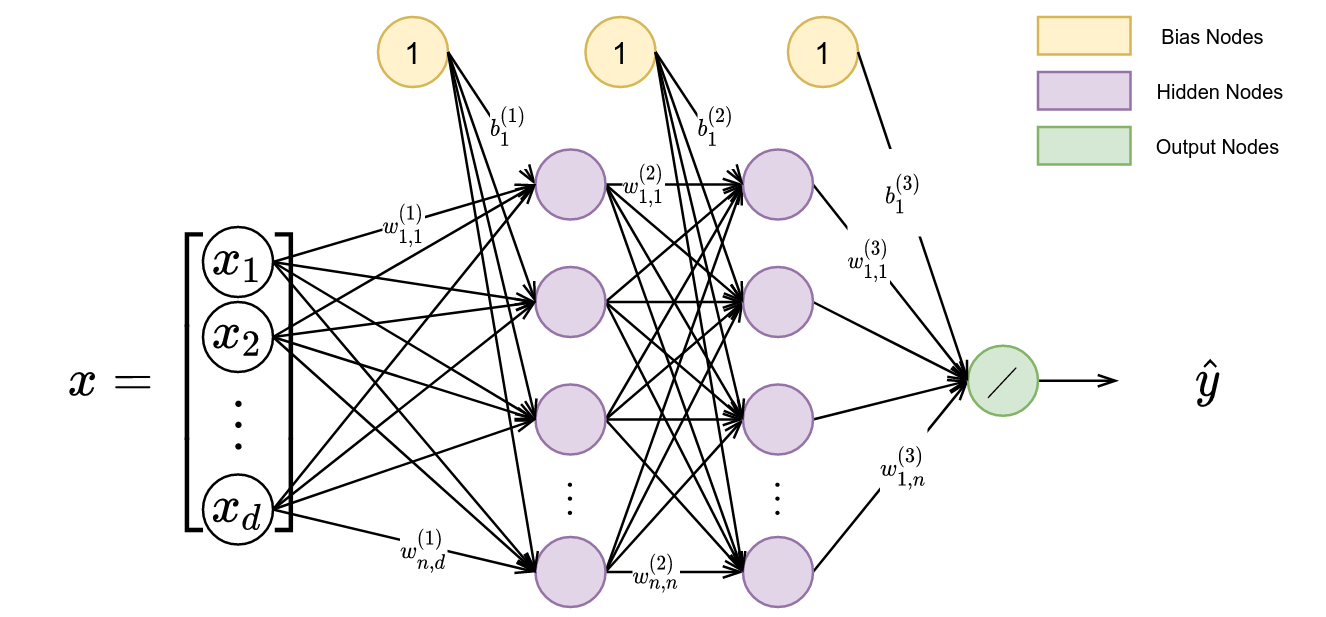

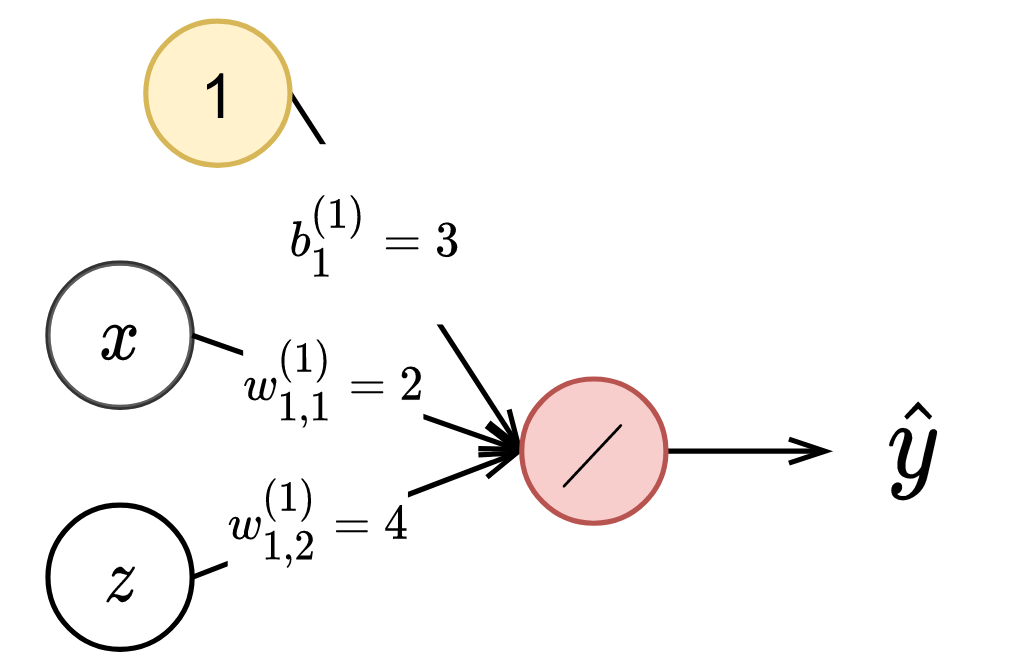

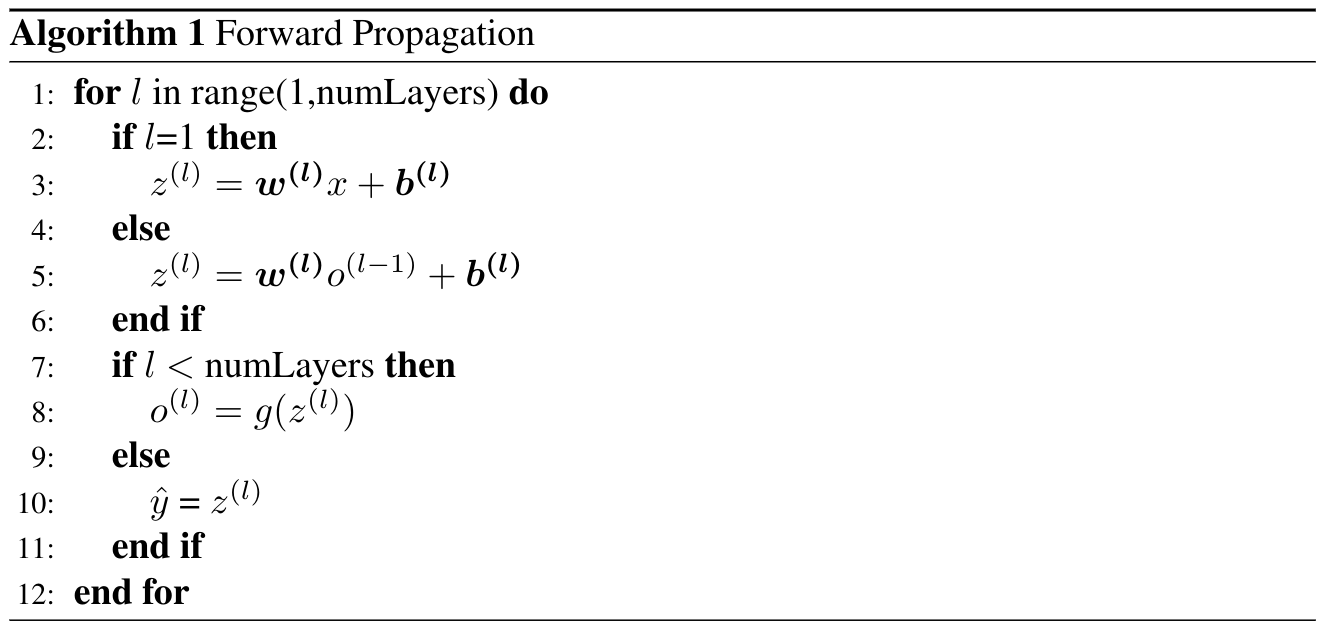

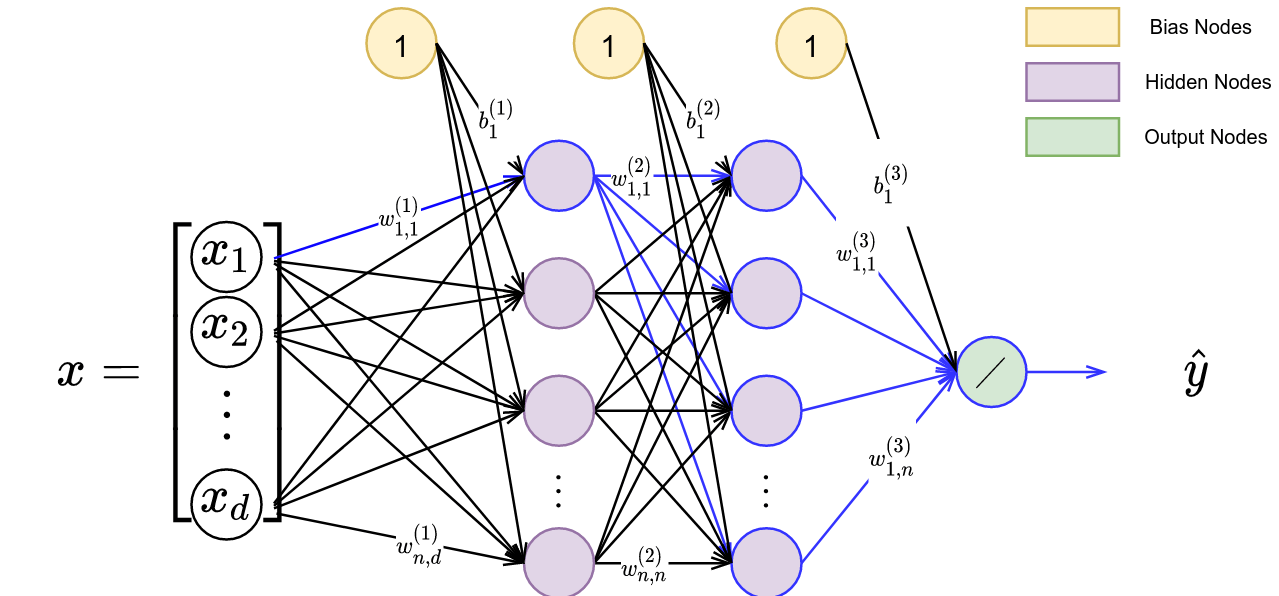

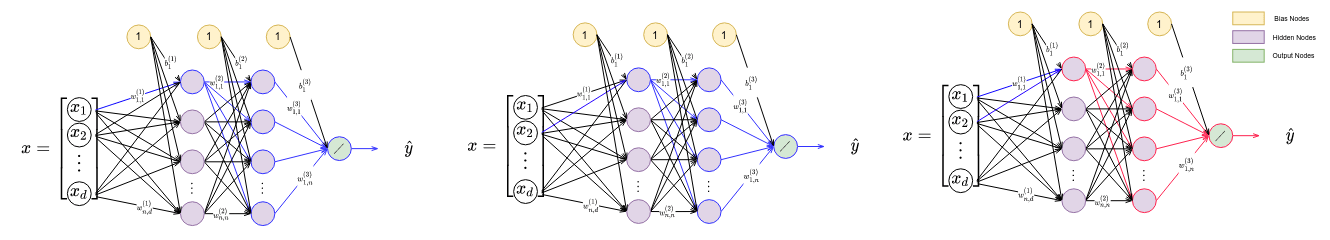

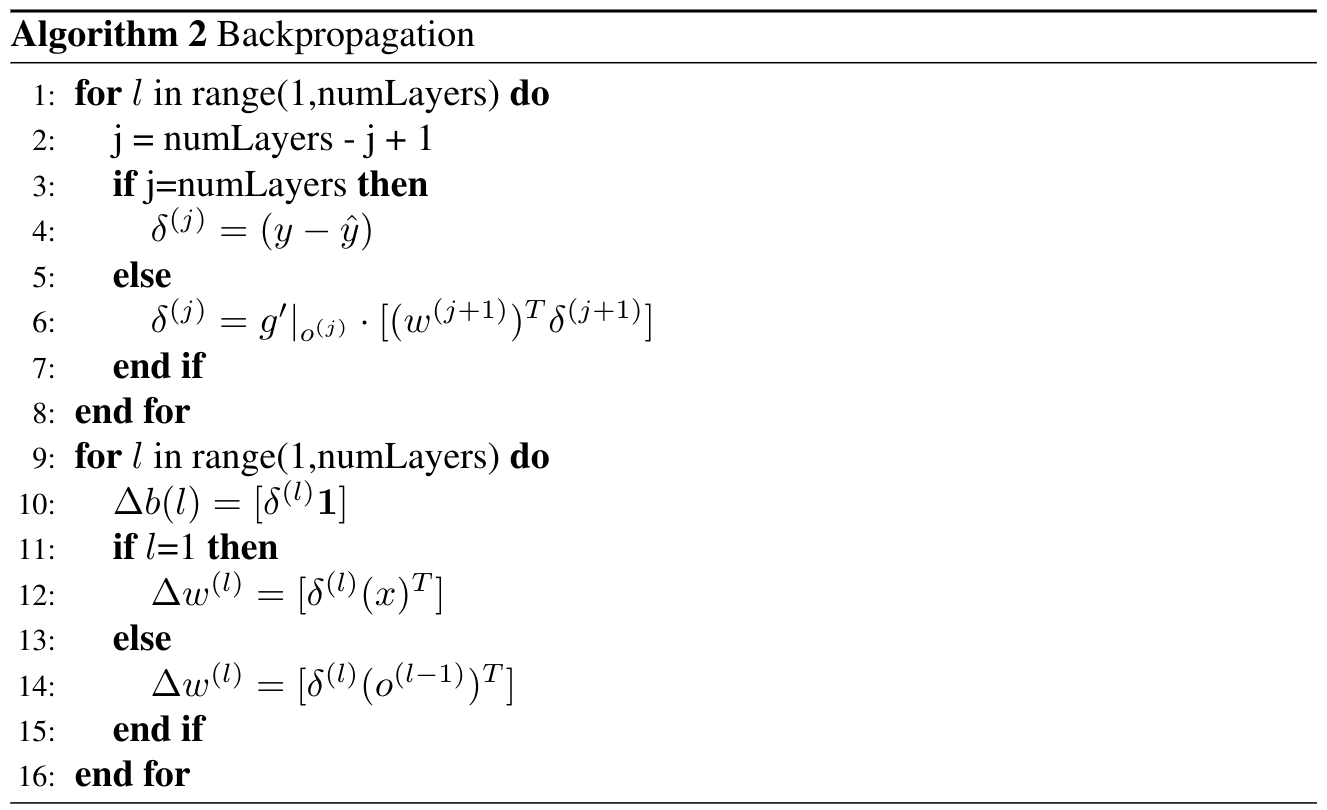

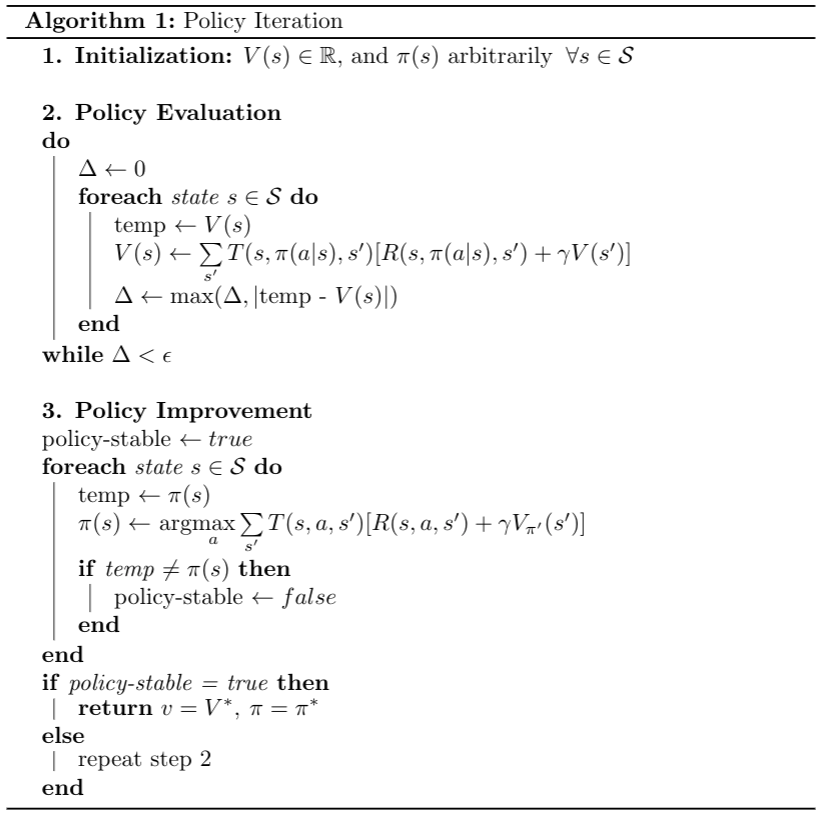

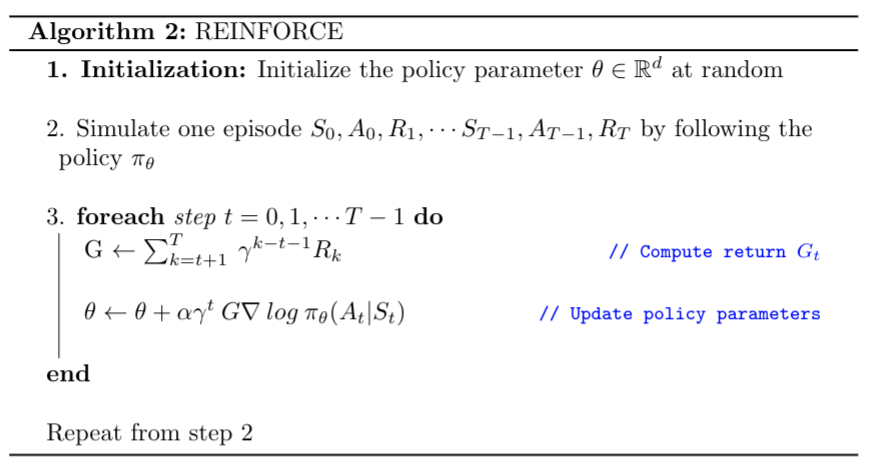

The first session will address the following: (a) How graph neural networks work: we will present a comprehensive overview of the graph neural networks proposed in prior literature, covering both homogeneous and heterogeneous graphs as well as attention mechanisms; (b) How to model team coordination problems with graph neural networks: we will discuss which applications are suitable for modeling with graph neural networks, with a focus on MRC problems; (c) How to optimize the parameters of graph neural networks for team coordination problems: we will discuss which learning methods can be used to train a graph neural network-based solver. We conclude this session with the most frequently recurring challenges and open questions.
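As a rough illustration of the message-passing idea behind item (a), the sketch below performs one round of mean aggregation over a tiny directed graph in plain Python. It is not taken from the tutorial materials: the function name `message_passing_step`, the node names, and the feature values are all hypothetical, and the sketch omits the learned weights, nonlinearities, and attention coefficients that a real graph neural network layer would include.

```python
from typing import Dict, List, Tuple


def message_passing_step(
    features: Dict[str, List[float]],
    edges: List[Tuple[str, str]],
) -> Dict[str, List[float]]:
    """One round of mean-aggregation message passing on a directed graph.

    Each node's new feature vector is the average of its own features
    and the features of every node with an edge pointing to it.
    """
    # Every node starts with a self-loop so it keeps part of its own state.
    neighbors = {node: [node] for node in features}
    for src, dst in edges:
        neighbors[dst].append(src)

    updated = {}
    for node, nbrs in neighbors.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[n][i] for n in nbrs) / len(nbrs) for i in range(dim)
        ]
    return updated


# Hypothetical toy graph: two robots, one task, edges point robot -> task.
features = {
    "robot_a": [1.0, 0.0],
    "robot_b": [0.0, 1.0],
    "task_1": [2.0, 2.0],
}
edges = [("robot_a", "task_1"), ("robot_b", "task_1")]

result = message_passing_step(features, edges)
print(result["task_1"])   # [1.0, 1.0]
print(result["robot_a"])  # [1.0, 0.0] (no incoming edges, so unchanged)
```

In this simplified picture, an attention-based layer would replace the uniform average with learned, per-edge weights, and a heterogeneous graph would keep separate update rules for each node or edge type.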

Tutorial notes: [Slides]

Video:

Session #2 by Zheyuan Wang (12:30 – 14:00)

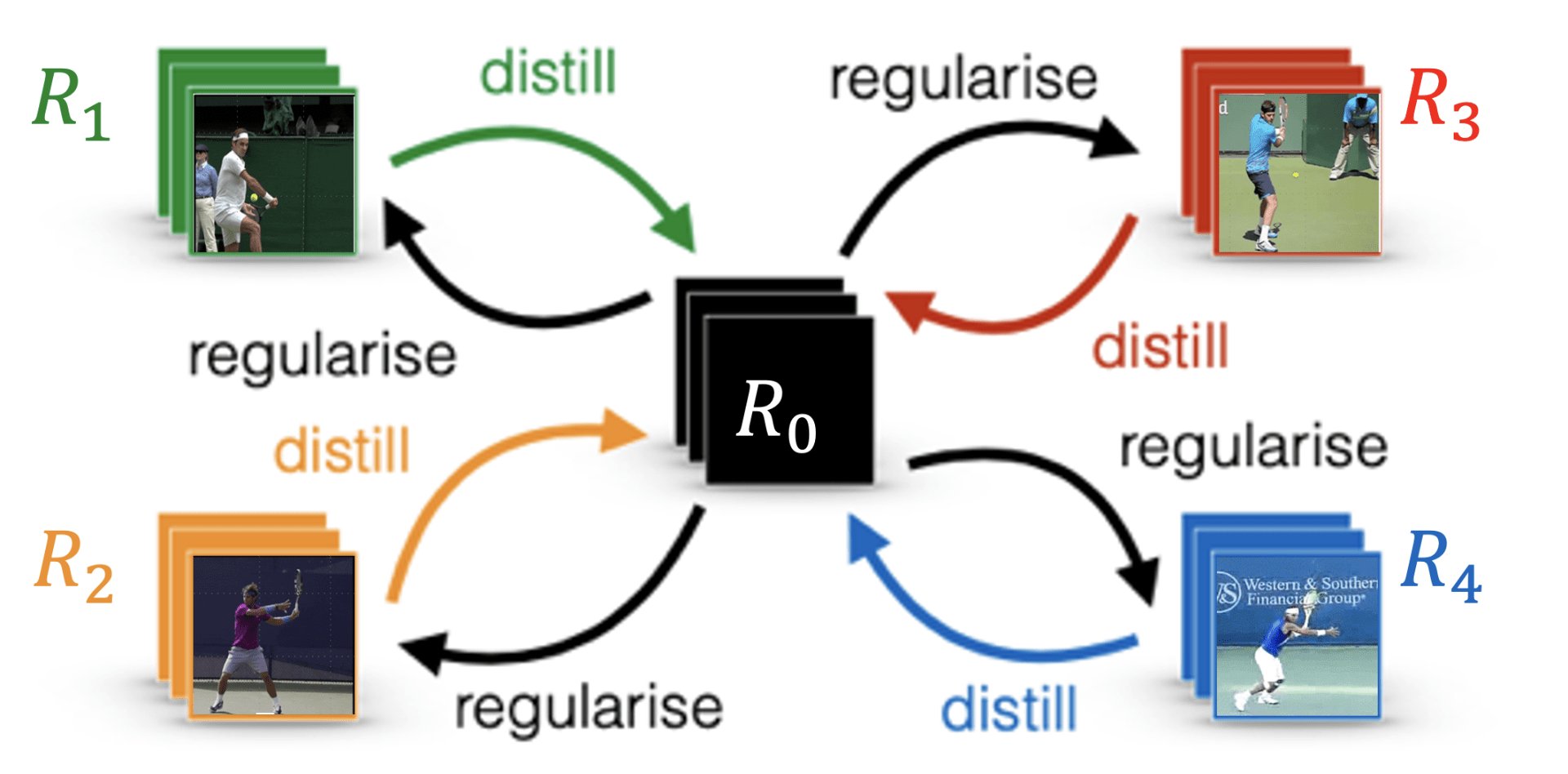

The second session will provide a hands-on tutorial on how to get up and running with graph neural networks for coordination problems, with coding examples in a Python Jupyter notebook. In particular, we will look into the ScheduleNet architecture [6], a heterogeneous graph neural network-based solver for MRC problems under temporal and spatial constraints. The Jupyter notebook will work through the implementation, training, and evaluation of ScheduleNet models on a synthetic dataset.

Python Jupyter notebook: [Open in Google Colab] [Github]

Video:

Target Audience

The target audience is students and researchers who have a strong grasp of machine learning but who may not yet be familiar with graph neural networks. Prior knowledge of Multi-agent Reinforcement Learning (MARL) or Planning & Scheduling would be helpful but is not necessary. While the tutorial showcases the application of graph neural networks to multi-robot coordination problems, the audience can apply the methodology to broader research problems in learning representations of multi-agent systems.

Presenters

Dr. Matthew Gombolay is an Assistant Professor of Interactive Computing at the Georgia Institute of Technology. He received a B.S. in Mechanical Engineering from the Johns Hopkins University in 2011, an S.M. in Aeronautics and Astronautics from MIT in 2013, and a Ph.D. in Autonomous Systems from MIT in 2017. Dr. Gombolay’s research interests span robotics, AI/ML, human–robot interaction, and operations research. Between defending his dissertation and joining the faculty at Georgia Tech, Dr. Gombolay served as a technical staff member at MIT Lincoln Laboratory, transitioning his research to the U.S. Navy, which earned him an R&D 100 Award. His publication record includes a best paper award from the American Institute of Aeronautics and Astronautics, a best student paper finalist at the American Control Conference, and a best paper finalist at the Conference on Robot Learning. Dr. Gombolay was selected as a DARPA Riser in 2018, received 1st place for the Early Career Award from the National Fire Control Symposium, and was awarded a NASA Early Career Fellowship for increasing science autonomy in space.

Zheyuan Wang is a Ph.D. candidate in the School of Electrical and Computer Engineering (ECE) at the Georgia Institute of Technology. He received B.S. and M.E. degrees, both in Electrical Engineering, from Shanghai Jiao Tong University, and an M.S. degree from ECE at Georgia Tech. He is currently a Graduate Research Assistant in the Cognitive Optimization and Relational (CORE) Robotics lab, led by Prof. Matthew Gombolay. His current research focuses on graph-based policy learning that uses graph neural networks for representation learning and reinforcement learning for decision-making, with applications to human-robot team collaboration, multi-agent reinforcement learning, and stochastic resource optimization.

Reading Materials

- Ernesto Nunes, Marie Manner, Hakim Mitiche, and Maria Gini. 2017. A taxonomy for task allocation problems with temporal and ordering constraints. Robotics and Autonomous Systems 90 (2017), 55–70.

- Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Liò, and Yoshua Bengio. 2018. Graph attention networks. International Conference on Learning Representations (2018).

- Xiao Wang, Houye Ji, Chuan Shi, Bai Wang, Yanfang Ye, Peng Cui, and Philip S Yu. 2019. Heterogeneous graph attention network. The World Wide Web Conference (2019), 2022–2032.

- Jie Zhou, Ganqu Cui, Shengding Hu, Zhengyan Zhang, Cheng Yang, Zhiyuan Liu, Lifeng Wang, Changcheng Li, and Maosong Sun. 2020. Graph neural networks: A review of methods and applications. AI Open 1 (2020), 57–81.

- Zheyuan Wang and Matthew Gombolay. 2020. Learning scheduling policies for multi-robot coordination with graph attention networks. IEEE Robotics and Automation Letters 5, 3 (2020), 4509–4516.

- Zheyuan Wang, Chen Liu, and Matthew Gombolay. 2021. Heterogeneous graph attention networks for scalable multi-robot scheduling with temporospatial constraints. Autonomous Robots (2021), 1–20.